GBDT

Advantage: GBDT achieves strong performance on many tasks. It is insensitive to the scale of the input features, can fill in missing feature values automatically, and can be used for feature selection.

Key word: Regressor

Usage: A regressor used to forecast labels or values

Great Explanation

GBDT Algorithms: Principles - Develop Paper

See chapter 3 for the formulation

Core idea

The core question is whether weak learners can be modified, step by step, to become a better learner.

Logic Flow

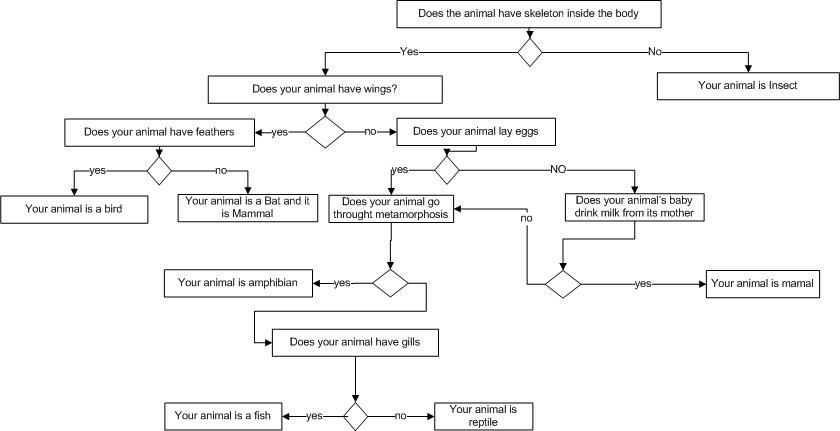

Decision trees

Decision trees can be modeled like this:

By following one decision after another from the root downward, the tree finally outputs something that helps make a decision, for example a forecast.
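A decision tree can be read as nested if/else rules. The sketch below is a hand-written toy tree, not a trained model: the function name `predict_sales`, the features `temperature` and `is_weekend`, the thresholds, and the leaf values are all made up for illustration.

```python
# Hypothetical hand-written tree: feature names, thresholds and leaf
# values are invented for illustration, not learned from data.
def predict_sales(temperature: float, is_weekend: bool) -> float:
    """Walk from the root to a leaf and return that leaf's forecast."""
    if temperature > 25.0:      # root decision
        if is_weekend:          # second-level decision
            return 120.0        # leaf
        return 80.0             # leaf
    return 40.0                 # leaf


print(predict_sales(30.0, True))
print(predict_sales(10.0, False))
```

In a trained tree the decisions and leaf values are chosen from the data instead of being written by hand.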

Ensemble learning

A single decision tree makes a prediction by routing each sample to a leaf and outputting the value stored in that leaf. However, a single decision tree may overfit rather quickly and generalize poorly. As a result, many decision trees are combined for learning.

Random forest

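One way is to use a random forest, which trains many decision trees independently and combines their predictions with a plain average (or a majority vote for classification), not a weighted sum. Because the trees do not depend on each other, they can be trained in parallel.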
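The averaging idea can be sketched in a few lines of plain Python. This is a simplified illustration, not a full random forest: each "tree" is a depth-1 stump on a single feature, every tree sees its own bootstrap sample, and the random feature subsampling that real random forests also use is left out. The helper names `fit_stump`, `fit_forest`, and `predict_forest` and the toy data are assumptions made for this example.

```python
import random


def fit_stump(xs, targets):
    """Fit a depth-1 regression tree: one threshold, one mean per side."""
    values = sorted(set(xs))
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold between two neighbours
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # only one distinct x: predict the mean everywhere
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train each stump independently on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return trees


def predict_forest(trees, x):
    """Combine the trees with a plain, unweighted average."""
    return sum(tree(x) for tree in trees) / len(trees)


# Toy data (made up): a step from about 1 to about 5.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [1.0, 1.2, 0.9, 1.1, 5.0, 5.2, 4.9, 5.1]
trees = fit_forest(xs, ys)
print(predict_forest(trees, 2.0), predict_forest(trees, 7.0))
```

Since no tree needs the output of another, the loop in `fit_forest` could be distributed across workers without changing the result.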

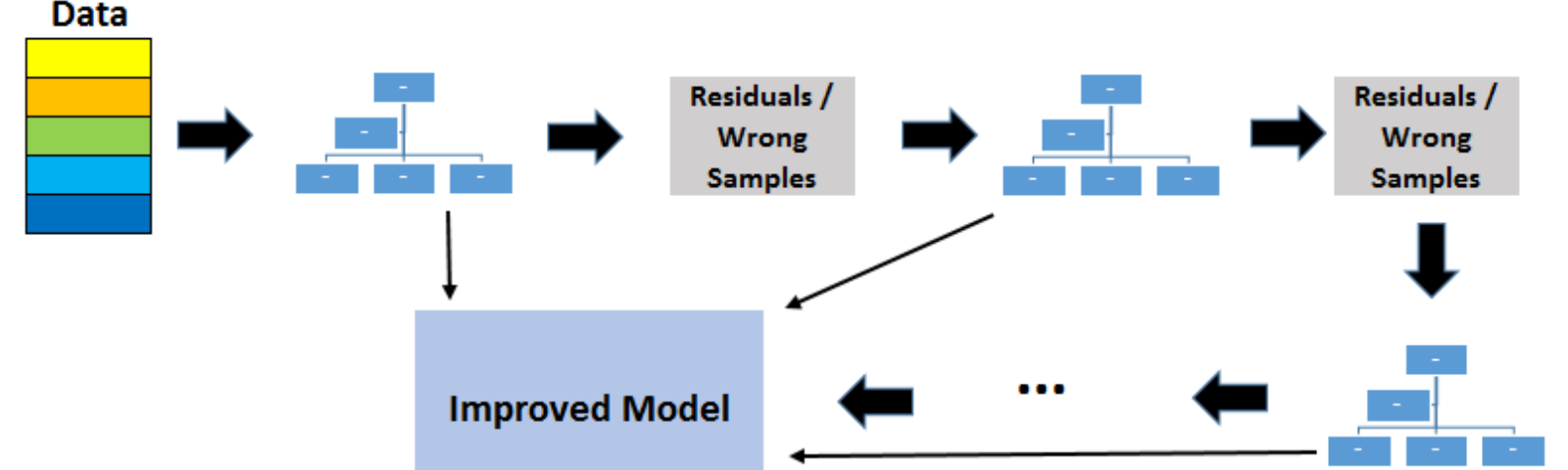

Gradient boosting decision trees

Combine many weak learners to form a strong learner. The trees are built one after another, and the process of updating them can be described as follows.

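- Choose a loss function and fit the first decision tree to the targets y; its output is y_prediction1. Each later tree acts as one gradient descent step on that loss.

- Compute the error residuals of the first decision tree: e1 = y - y_prediction1. Fit the second decision tree to e1; its output is e1_predicted.

- The prediction after the second decision tree is y_prediction2 = y_prediction1 + e1_predicted.

- The residual after the second decision tree is e2 = y - y_prediction2. The next decision tree is fitted to e2, and its output is e2_predicted.

- Repeat the process, updating the residuals sequentially.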
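Why fitting residuals counts as gradient descent can be seen with a short derivation. It assumes the squared-error loss, which these notes do not state explicitly; the symbols $F_k$ (prediction after $k$ trees, i.e. y_prediction_k) and $h_k$ (output of the next tree, i.e. e_k_predicted) are introduced here for illustration.

```latex
L(y, F_k) = \tfrac{1}{2}\,(y - F_k)^2
\qquad\Longrightarrow\qquad
-\frac{\partial L}{\partial F_k} = y - F_k = e_k

F_{k+1} = F_k + h_k, \qquad h_k \approx e_k
```

Under this loss the residual $e_k$ is exactly the negative gradient, so adding a tree that approximates $e_k$ moves the prediction in the direction that lowers the loss. For other losses the tree is fitted to the negative gradient instead of the plain residual.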

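Following this procedure, the model keeps adding trees until a stopping condition is met, for example a fixed number of trees or residuals that no longer shrink, and then terminates.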
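The residual-fitting loop described above can be sketched in plain Python. This is a minimal illustration under several assumptions, not a reference implementation: the loss is squared error, each tree is a depth-1 stump on a single feature, the stopping rule is a fixed number of trees, and `learning_rate` (a shrinkage factor common in libraries) defaults to 1.0 so the update matches y_prediction2 = y_prediction1 + e1_predicted. The names `fit_stump`, `fit_gbdt`, and `predict_gbdt` and the toy data are hypothetical.

```python
def fit_stump(xs, targets):
    """Fit a depth-1 regression tree: one threshold, one mean per side."""
    values = sorted(set(xs))
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold between two neighbours
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # only one distinct x: predict the mean everywhere
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def fit_gbdt(xs, ys, n_trees=20, learning_rate=1.0):
    """Sequentially fit stumps to the current residuals (squared loss)."""
    preds = [0.0] * len(xs)  # start from zero, so the first tree is fit to y
    trees, history = [], []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]   # e_k = y - y_prediction_k
        tree = fit_stump(xs, residuals)                  # outputs e_k_predicted
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
        trees.append(tree)
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return trees, history


def predict_gbdt(trees, x, learning_rate=1.0):
    """The final prediction is the sum of all tree outputs."""
    return learning_rate * sum(tree(x) for tree in trees)


# Toy data (made up): y = x^2 on eight points.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [x * x for x in xs]
trees, history = fit_gbdt(xs, ys)
print("training MSE after first tree:", history[0])
print("training MSE after last tree: ", history[-1])
print("prediction at x=3:", predict_gbdt(trees, 3.0))
```

`history` records the training error after each tree, which makes the sequential improvement visible. Unlike the random forest, each tree here depends on the predictions of all earlier trees, so the loop cannot be parallelized across trees.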